Technical Background

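While integrating our push module with Unity, we received the following requirement: developers wanted to capture data from the front and rear cameras in a Unity scene on Android and push it to an RTMP server, with low-latency capture and processing.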

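Before this, we already had a very mature RTMP push module and had already implemented Unity Camera (scene) capture on Android. For this requirement there are two possible approaches: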

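1. Wrap the native Android camera API: open the camera, receive NV12/NV21 data through a callback, and render it in the Unity environment;

2. Use the WebCamTexture component, which obtains the data through the system interface, and encode and push that data directly.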

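The first approach requires an extra layer of wrapping around the camera API plus the data callbacks. It is workable, but less convenient than the WebCamTexture component, so this article mainly covers approach 2.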
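To put the two data paths side by side, the following sketch computes the size of one frame in each format: approach 1 would hand over NV12/NV21 (a full-resolution Y plane plus one interleaved chroma plane at quarter resolution), while approach 2 reads 4-byte RGBA32 pixels from WebCamTexture. The `FrameSizes` class and its methods are hypothetical names, not part of any SDK.

```csharp
using System;

// Hypothetical helper: byte size of one frame in the two layouts discussed above.
public static class FrameSizes
{
    // NV12/NV21: full-resolution Y plane followed by one interleaved chroma plane
    // at quarter resolution (UV order for NV12, VU order for NV21).
    public static int Nv21Bytes(int width, int height)
    {
        if (width <= 0 || height <= 0 || (width & 1) != 0 || (height & 1) != 0)
            throw new ArgumentException("NV12/NV21 needs positive, even dimensions");
        int ySize = width * height;
        int chromaSize = (width / 2) * (height / 2) * 2; // one U and one V byte per 2x2 block
        return ySize + chromaSize;                       // equals width * height * 3 / 2
    }

    // RGBA32 (Color32 from WebCamTexture.GetPixels32): 4 bytes per pixel,
    // tightly packed, so the row stride is width * 4.
    public static int Rgba32Bytes(int width, int height)
    {
        if (width <= 0 || height <= 0)
            throw new ArgumentException("dimensions must be positive");
        int rowStride = width * 4;
        return rowStride * height;
    }
}
```

At 1280x720 this gives 1,382,400 bytes for NV21 versus 3,686,400 bytes for RGBA32, so approach 2 hands roughly 2.7 times more data per frame to the native layer in exchange for its simpler integration.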

WebCamTexture

WebCamTexture inherits from Texture. The following summary is taken from the official documentation.

Description

WebCamTexture is a texture onto which the live video input is rendered.

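Static Properties

devices: Returns a list of available devices.

Properties

autoFocusPoint: Sets or gets the auto-focus point of the camera. Only works on Android and iOS devices.

deviceName: Set this to specify the name of the device to use.

didUpdateThisFrame: Did the video buffer update this frame?

isDepth: This property is true if the texture is based on depth data.

isPlaying: Returns whether the camera is currently running.

requestedFPS: Sets the requested frame rate of the camera device (in frames per second).

requestedHeight: Sets the requested height of the camera device.

requestedWidth: Sets the requested width of the camera device.

videoRotationAngle: Returns a clockwise angle (in degrees) that can be used to rotate a polygon so that the camera contents are shown in the correct orientation.

videoVerticallyMirrored: Returns whether the texture image is vertically flipped.

Constructors

WebCamTexture: Creates a WebCamTexture.

Public Methods

GetPixel: Returns the pixel color at coordinates (x, y).

GetPixels: Gets a block of pixel colors.

GetPixels32: Returns the pixel data in raw format.

Pause: Pauses the camera.

Play: Starts the camera.

Stop: Stops the camera.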
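The videoRotationAngle and videoVerticallyMirrored properties listed above matter mainly for the local preview. A minimal sketch, assuming the preview is a RawImage such as web_cam_raw_image_, of turning them into a Z rotation, a vertical scale, and the upright display size. The `PreviewTransform` and `PreviewLayout` types are hypothetical, and the exact signs may need adjusting to how the RawImage is set up.

```csharp
using System;

// Hypothetical result type; in Unity the inputs come from WebCamTexture's
// videoRotationAngle, videoVerticallyMirrored, width and height.
public struct PreviewTransform
{
    public float ZRotation;   // degrees for RectTransform.localEulerAngles.z
    public float ScaleY;      // -1 flips the image vertically
    public int DisplayWidth;  // size of the upright picture
    public int DisplayHeight;
}

public static class PreviewLayout
{
    public static PreviewTransform Compute(int rotationAngle, bool verticallyMirrored,
                                           int textureWidth, int textureHeight)
    {
        // Normalize to [0, 360) so negative or oversized angles behave the same.
        int angle = ((rotationAngle % 360) + 360) % 360;
        bool quarterTurn = angle == 90 || angle == 270;

        return new PreviewTransform
        {
            // videoRotationAngle is clockwise; a positive Z rotation of a UI
            // element in Unity turns it counter-clockwise, hence the negation.
            ZRotation = -angle,
            ScaleY = verticallyMirrored ? -1f : 1f,
            // A 90 or 270 degree turn swaps the visible width and height.
            DisplayWidth = quarterTurn ? textureHeight : textureWidth,
            DisplayHeight = quarterTurn ? textureWidth : textureHeight,
        };
    }
}
```

In a Unity script the result would then be applied with `rawImage.rectTransform.localEulerAngles = new Vector3(0, 0, t.ZRotation)` and `rawImage.rectTransform.localScale = new Vector3(1, t.ScaleY, 1)`.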

Implementation

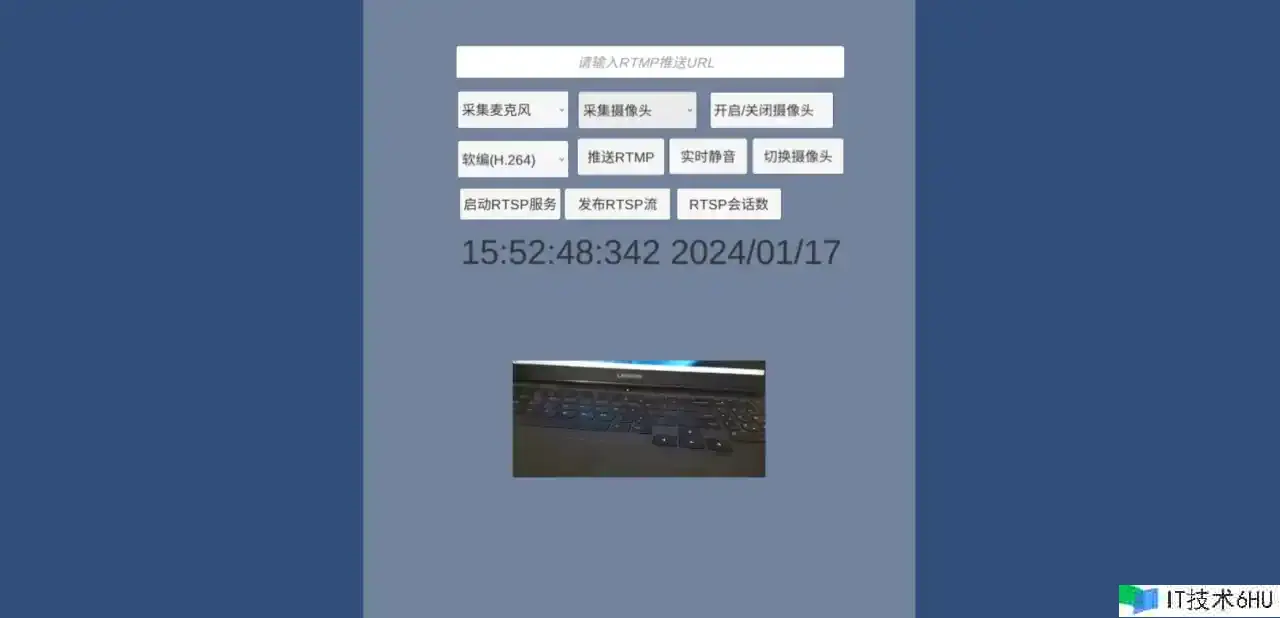

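This article uses WebCamTexture capture and push under Unity with the Daniu Live SDK (大牛直播SDK) as the example. For audio, you can capture the microphone, or capture the Unity scene's audio through an AudioClip; for video, you can capture a Unity scene camera or the device camera.

In addition, the usual encoding parameters can be configured, such as software or hardware encoding, frame rate, bitrate, and keyframe interval.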

First, opening the camera:

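public IEnumerator InitCameraCor()
{
    // Request camera permission
    yield return Application.RequestUserAuthorization(UserAuthorization.WebCam);

    if (Application.HasUserAuthorization(UserAuthorization.WebCam) && WebCamTexture.devices.Length > 0)
    {
        // Keep the index within range in case the device list is shorter than expected
        web_cam_index_ = web_cam_index_ % WebCamTexture.devices.Length;

        // Create the camera texture and show it in the preview RawImage
        web_cam_texture_ = new WebCamTexture(WebCamTexture.devices[web_cam_index_].name, web_cam_width_, web_cam_height_, fps_);
        web_cam_raw_image_.texture = web_cam_texture_;
        web_cam_texture_.Play();
    }
}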

Switching between the front and rear cameras

private void SwitchCamera()
{
    if (WebCamTexture.devices.Length < 1)
        return;

    // Release the current camera (also when paused) before opening the next one
    if (web_cam_texture_ != null)
    {
        web_cam_raw_image_.enabled = false;
        web_cam_texture_.Stop();
        web_cam_texture_ = null;
    }

    // Advance to the next device, wrapping around at the end of the list
    web_cam_index_++;
    web_cam_index_ = web_cam_index_ % WebCamTexture.devices.Length;

    web_cam_texture_ = new WebCamTexture(WebCamTexture.devices[web_cam_index_].name, web_cam_width_, web_cam_height_, fps_);
    web_cam_raw_image_.texture = web_cam_texture_;
    web_cam_raw_image_.enabled = true;
    web_cam_texture_.Play();
}
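SwitchCamera() simply advances to the next index. On devices that expose more than two cameras (for example several rear lenses), cycling may not alternate between front and rear, so a variant could look a camera up by WebCamDevice.isFrontFacing instead. The sketch below works on a plain list of facing flags so it runs outside Unity; `CameraPicker` and its methods are hypothetical names.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper for choosing a camera; in Unity the flags would be
// WebCamTexture.devices[i].isFrontFacing.
public static class CameraPicker
{
    // Returns the first device whose facing matches, or -1 if none does.
    public static int FindByFacing(IReadOnlyList<bool> isFrontFacing, bool wantFront)
    {
        for (int i = 0; i < isFrontFacing.Count; i++)
        {
            if (isFrontFacing[i] == wantFront)
                return i;
        }
        return -1;
    }

    // Same wrap-around rule as SwitchCamera(), but returns -1 when there are no devices.
    public static int Next(int current, int deviceCount)
    {
        if (deviceCount <= 0)
            return -1;
        int next = (current + 1) % deviceCount;
        return next < 0 ? next + deviceCount : next;   // guard against negative input
    }
}
```

In Unity the flag list could be built with `WebCamTexture.devices.Select(d => d.isFrontFacing).ToArray()` (with `using System.Linq;`), and a result of -1 means the requested camera is not available.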

Starting/stopping the RTMP push

private void OnPusherBtnClicked()
{
    if (is_pushing_rtmp_)
    {
        // Stop capture only if the lightweight RTSP service is not still using it
        if (!is_rtsp_publisher_running_)
        {
            StopCaptureAvData();
            if (coroutine_ != null)
            {
                StopCoroutine(coroutine_);
                coroutine_ = null;
            }
        }

        StopRtmpPusher();
        btn_pusher_.GetComponentInChildren<Text>().text = "Push RTMP";
    }
    else
    {
        bool is_started = StartRtmpPusher();
        if (is_started)
        {
            btn_pusher_.GetComponentInChildren<Text>().text = "Stop RTMP";

            // Start capture only if the RTSP service has not already started it
            if (!is_rtsp_publisher_running_)
            {
                StartCaptureAvData();
                coroutine_ = StartCoroutine(OnPostVideo());
            }
        }
    }
}
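OnPusherBtnClicked() and StopRtmpPusher() only start or stop capture, and only open or close the publisher handle, when the lightweight RTSP service is not running, because both outputs share the same handle and capture pipeline. That rule can be modelled as a small piece of state. `SharedPublisher` is a hypothetical name, and the sketch tracks only the two booleans the article uses.

```csharp
using System;

// Hypothetical model of the sharing rule: capture and the publisher handle are
// brought up by the first output that starts and torn down by the last one that stops.
public sealed class SharedPublisher
{
    private bool rtmpRunning;
    private bool rtspRunning;

    public bool CaptureActive => rtmpRunning || rtspRunning;

    // Returns true if this call should open the handle and start capture.
    public bool Start(bool rtmp)
    {
        bool wasActive = CaptureActive;
        if (rtmp) rtmpRunning = true; else rtspRunning = true;
        return !wasActive;
    }

    // Returns true if this call should stop capture and close the handle.
    public bool Stop(bool rtmp)
    {
        bool wasActive = CaptureActive;
        if (rtmp) rtmpRunning = false; else rtspRunning = false;
        return wasActive && !CaptureActive;
    }
}
```

Keeping the decision in one place means the RTMP and RTSP buttons cannot disagree about who owns the handle, which is what the repeated `!is_rtsp_publisher_running_` checks in the original guard against.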

The RTMP push is started as follows:

public bool StartRtmpPusher()
{
    if (is_pushing_rtmp_)
    {
        Debug.Log("Already pushing..");
        return false;
    }

    // Read the push URL from the input field
    string url = input_url_.text.Trim();
    if (string.IsNullOrEmpty(url))
    {
        Debug.LogError("StartRtmpPusher, push url is empty..");
        return false;
    }

    // Open and configure the publisher unless the RTSP service already did
    if (!is_rtsp_publisher_running_)
    {
        InitAndSetConfig();
    }

    if (pusher_handle_ == 0)
    {
        Debug.LogError("StartRtmpPusher, publisherHandle is null..");
        return false;
    }

    // Push to the URL entered above
    NT_PB_U3D_SetPushUrl(pusher_handle_, url);

    int is_suc = NT_PB_U3D_StartPublisher(pusher_handle_);
    if (is_suc == DANIULIVE_RETURN_OK)
    {
        Debug.Log("StartPublisher success..");
        is_pushing_rtmp_ = true;
    }
    else
    {
        Debug.LogError("StartPublisher failed..");
        return false;
    }

    return true;
}
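Beyond whatever the SDK checks itself, the text typed into input_url_ can be sanity-checked before NT_PB_U3D_SetPushUrl() is called. A minimal pre-check, assuming only that a usable push URL has the rtmp scheme, a host, and an app/stream path, can be done with System.Uri. `PushUrl.LooksLikeRtmp` is a hypothetical helper, and the SDK may apply different rules.

```csharp
using System;

// Hypothetical pre-check; it only rejects obvious mistakes such as an empty
// field or a pasted http:// address.
public static class PushUrl
{
    public static bool LooksLikeRtmp(string text)
    {
        if (string.IsNullOrWhiteSpace(text))
            return false;

        Uri uri;
        if (!Uri.TryCreate(text.Trim(), UriKind.Absolute, out uri))
            return false;

        // Uri.Scheme is always lower-case; require a host and a non-trivial path.
        return uri.Scheme == "rtmp"
            && !string.IsNullOrEmpty(uri.Host)
            && uri.AbsolutePath.Length > 1;
    }
}
```

Failing early with a clear log message is cheaper than opening the publisher handle and waiting for NT_PB_U3D_StartPublisher() to fail on a malformed address.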

The corresponding InitAndSetConfig() is implemented as follows:

private void InitAndSetConfig()
{
    if (java_obj_cur_activity_ == null)
    {
        Debug.LogError("[daniusdk.com]getApplicationContext is null");
        return;
    }

    int audio_opt = 1;
    int video_opt = 3;

    // Encoder output size; this sample takes it from the Unity Camera (camera_),
    // while each frame delivered later carries its own width/height.
    video_width_ = camera_.pixelWidth;
    video_height_ = camera_.pixelHeight;

    pusher_handle_ = NT_PB_U3D_Open(audio_opt, video_opt, video_width_, video_height_);
    if (pusher_handle_ != 0)
    {
        Debug.Log("NT_PB_U3D_Open success");
        NT_PB_U3D_Set_Game_Object(pusher_handle_, game_object_);
    }
    else
    {
        Debug.LogError("NT_PB_U3D_Open failed!");
        return;
    }

    int fps = 30;
    int gop = fps * 2;   // one keyframe every 2 seconds

    if (video_encoder_type_ == (int)PB_VIDEO_ENCODER_TYPE.VIDEO_ENCODER_HARDWARE_AVC)
    {
        int h264HWKbps = setHardwareEncoderKbps(true, video_width_, video_height_);
        h264HWKbps = h264HWKbps * fps / 25;   // scale the bitrate to the target frame rate
        Debug.Log("h264HWKbps: " + h264HWKbps);

        int isSupportH264HWEncoder = NT_PB_U3D_SetVideoHWEncoder(pusher_handle_, h264HWKbps);
        if (isSupportH264HWEncoder == 0)
        {
            NT_PB_U3D_SetNativeMediaNDK(pusher_handle_, 0);
            NT_PB_U3D_SetVideoHWEncoderBitrateMode(pusher_handle_, 1); // 0:CQ, 1:VBR, 2:CBR
            NT_PB_U3D_SetVideoHWEncoderQuality(pusher_handle_, 39);
            NT_PB_U3D_SetAVCHWEncoderProfile(pusher_handle_, 0x08);    // 0x01: Baseline, 0x02: Main, 0x08: High
            // NT_PB_U3D_SetAVCHWEncoderLevel(pusher_handle_, 0x200);  // Level 3.1
            // NT_PB_U3D_SetAVCHWEncoderLevel(pusher_handle_, 0x400);  // Level 3.2
            // NT_PB_U3D_SetAVCHWEncoderLevel(pusher_handle_, 0x800);  // Level 4
            NT_PB_U3D_SetAVCHWEncoderLevel(pusher_handle_, 0x1000);    // Level 4.1, sufficient in most cases
            // NT_PB_U3D_SetAVCHWEncoderLevel(pusher_handle_, 0x2000); // Level 4.2
            // NT_PB_U3D_SetVideoHWEncoderMaxBitrate(pusher_handle_, ((long)h264HWKbps)*1300);
            Debug.Log("Great, it supports h.264 hardware encoder!");
        }
    }
    else if (video_encoder_type_ == (int)PB_VIDEO_ENCODER_TYPE.VIDEO_ENCODER_HARDWARE_HEVC)
    {
        int hevcHWKbps = setHardwareEncoderKbps(false, video_width_, video_height_);
        hevcHWKbps = hevcHWKbps * fps / 25;   // scale the bitrate to the target frame rate
        Debug.Log("hevcHWKbps: " + hevcHWKbps);

        int isSupportHevcHWEncoder = NT_PB_U3D_SetVideoHevcHWEncoder(pusher_handle_, hevcHWKbps);
        if (isSupportHevcHWEncoder == 0)
        {
            NT_PB_U3D_SetNativeMediaNDK(pusher_handle_, 0);
            NT_PB_U3D_SetVideoHWEncoderBitrateMode(pusher_handle_, 0); // 0:CQ, 1:VBR, 2:CBR
            NT_PB_U3D_SetVideoHWEncoderQuality(pusher_handle_, 39);
            // NT_PB_U3D_SetVideoHWEncoderMaxBitrate(pusher_handle_, ((long)hevcHWKbps)*1200);
            Debug.Log("Great, it supports hevc hardware encoder!");
        }
    }
    else
    {
        if (is_sw_vbr_mode_) // H.264 software encoder
        {
            int is_enable_vbr = 1;
            int video_quality = CalVideoQuality(video_width_, video_height_, true);
            int vbr_max_bitrate = CalVbrMaxKBitRate(video_width_, video_height_);
            vbr_max_bitrate = vbr_max_bitrate * fps / 25;
            NT_PB_U3D_SetSwVBRMode(pusher_handle_, is_enable_vbr, video_quality, vbr_max_bitrate);
            // NT_PB_U3D_SetSWVideoEncoderSpeed(pusher_handle_, 2);
        }
    }

    NT_PB_U3D_SetAudioCodecType(pusher_handle_, 1);
    NT_PB_U3D_SetFPS(pusher_handle_, fps);
    NT_PB_U3D_SetGopInterval(pusher_handle_, gop);

    // Enable audio mixing only for the two mixer audio options
    if (audio_push_type_ == (int)PB_AUDIO_OPTION.AUDIO_OPTION_MIC_EXTERNAL_PCM_MIXER
        || audio_push_type_ == (int)PB_AUDIO_OPTION.AUDIO_OPTION_TWO_EXTERNAL_PCM_MIXER)
    {
        NT_PB_U3D_SetAudioMix(pusher_handle_, 1);
    }
    else
    {
        NT_PB_U3D_SetAudioMix(pusher_handle_, 0);
    }
}
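InitAndSetConfig() scales every bitrate by fps / 25, which suggests that the values from setHardwareEncoderKbps() and CalVbrMaxKBitRate() are tuned for 25 fps (an inference, since those functions are not shown), and sets the GOP to two seconds' worth of frames. The magic numbers in the comments coincide with Android's MediaCodecInfo.CodecProfileLevel and MediaCodecInfo.EncoderCapabilities constants. A sketch that gives them names; all type and member names here are hypothetical.

```csharp
using System;

// Hypothetical helpers mirroring the arithmetic in InitAndSetConfig().
public static class EncoderMath
{
    // Linear scaling from a 25 fps baseline, as the article's code does.
    public static int ScaleKbpsFrom25Fps(int kbpsAt25Fps, int fps)
    {
        if (kbpsAt25Fps < 0 || fps <= 0)
            throw new ArgumentOutOfRangeException();
        return (int)((long)kbpsAt25Fps * fps / 25); // long avoids overflow before dividing
    }

    // GOP length in frames for a keyframe every 'seconds' seconds.
    public static int GopFrames(int fps, int seconds) => fps * seconds;
}

// Same values as Android MediaCodecInfo.CodecProfileLevel (AVC profiles and levels).
public static class AvcConstants
{
    public const int ProfileBaseline = 0x01, ProfileMain = 0x02, ProfileHigh = 0x08;
    public const int Level31 = 0x200, Level32 = 0x400, Level4 = 0x800,
                     Level41 = 0x1000, Level42 = 0x2000;
}

// Same values as Android MediaCodecInfo.EncoderCapabilities.BITRATE_MODE_*.
public static class BitrateMode
{
    public const int CQ = 0, VBR = 1, CBR = 2;
}
```

For example, a table value of 1200 kbps at 25 fps becomes 1440 kbps at 30 fps, and a 2-second GOP at 30 fps is 60 frames. Calls such as `NT_PB_U3D_SetAVCHWEncoderLevel(pusher_handle_, AvcConstants.Level41)` would then read without the trailing comments.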

Data delivery

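// Read the current frame as RGBA32 (4 bytes per pixel); checking
// web_cam_texture_.didUpdateThisFrame first avoids re-sending an unchanged frame.
Color32[] cam_texture = web_cam_texture_.GetPixels32();
int rowStride = web_cam_texture_.width * 4;          // bytes per row
int length = rowStride * web_cam_texture_.height;    // bytes per frame
NT_PB_U3D_OnCaptureVideoRGBA32Data(pusher_handle_, (long)Color32ArrayToIntptr(cam_texture), length, rowStride,
    web_cam_texture_.width, web_cam_texture_.height, 1, 0, 0, 0, 0);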
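Color32ArrayToIntptr() is not shown in the article, so it is unclear whether it pins the array or copies it into unmanaged memory. One safe way to write such a helper, sketched here as an assumption, is to pin the managed array with GCHandle for exactly the duration of the native call and always unpin afterwards. `Rgba32` is a stand-in for UnityEngine.Color32 so the sketch compiles outside Unity, and `PixelPinning.WithPinnedPixels` is a hypothetical name.

```csharp
using System;
using System.Runtime.InteropServices;

// Stand-in for UnityEngine.Color32: four bytes r, g, b, a, laid out in order.
[StructLayout(LayoutKind.Sequential)]
public struct Rgba32
{
    public byte R, G, B, A;
    public Rgba32(byte r, byte g, byte b, byte a) { R = r; G = g; B = b; A = a; }
}

public static class PixelPinning
{
    // Pins 'pixels' so the GC cannot move it, passes its address and byte length
    // to 'deliver' (e.g. a wrapper around NT_PB_U3D_OnCaptureVideoRGBA32Data),
    // then unpins. The pointer must not be kept after 'deliver' returns.
    public static void WithPinnedPixels(Rgba32[] pixels, Action<IntPtr, int> deliver)
    {
        if (pixels == null) throw new ArgumentNullException(nameof(pixels));
        if (deliver == null) throw new ArgumentNullException(nameof(deliver));

        GCHandle handle = GCHandle.Alloc(pixels, GCHandleType.Pinned);
        try
        {
            int byteLength = pixels.Length * Marshal.SizeOf(typeof(Rgba32));
            deliver(handle.AddrOfPinnedObject(), byteLength);
        }
        finally
        {
            handle.Free(); // always unpin, even if the native call throws
        }
    }
}
```

Pinning avoids a per-frame copy; the alternative of Marshal.AllocHGlobal plus Marshal.Copy works too but leaks memory every frame unless the buffer is freed. This relies on the native call consuming (or copying) the data before it returns, which is an assumption about the SDK.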

Stopping the RTMP push

private void StopRtmpPusher()
{
    if (!is_pushing_rtmp_)
        return;

    NT_PB_U3D_StopPublisher(pusher_handle_);

    // Close the shared handle only if the lightweight RTSP service is not using it
    if (!is_rtsp_publisher_running_)
    {
        NT_PB_U3D_Close(pusher_handle_);
        pusher_handle_ = 0;
        NT_PB_U3D_UnInit();
    }

    is_pushing_rtmp_ = false;
}

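The interface wrapper for the lightweight RTSP service has been covered several times in earlier blog posts, so it is not repeated here.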

Summary

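When capturing camera data in Unity and encoding, packaging, and pushing it to an RTMP server or the lightweight RTSP service, obtaining the data is not the hard part. The main difficulty is controlling the frame rate delivered to the native module: for example, if 30 fps is configured but 50 frames per second are actually captured, the frames must be delivered at even intervals so that playback is smooth and latency stays low. Tested with SmartPlayer, the output of both the RTMP push and the lightweight RTSP service shows millisecond-level latency overall. Interested developers are welcome to contact me to discuss and test.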
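The frame-rate control described above can be sketched as a pacer that keeps a fixed schedule of due times and lets a captured frame through only once the next due time has been reached. This is one possible approach under stated assumptions, not the SDK's implementation; `FramePacer` is a hypothetical name, and the clock is passed in so the logic can run outside Unity.

```csharp
using System;

// Hypothetical frame pacer: decides, per captured frame, whether to hand it to
// the native encoder so that delivery approaches a fixed target frame rate.
public sealed class FramePacer
{
    private readonly double intervalSeconds;
    private double nextDueSeconds = double.NaN;

    public FramePacer(int targetFps)
    {
        if (targetFps <= 0) throw new ArgumentOutOfRangeException(nameof(targetFps));
        intervalSeconds = 1.0 / targetFps;
    }

    // nowSeconds: a monotonic clock, e.g. Time.realtimeSinceStartup in Unity.
    public bool ShouldDeliver(double nowSeconds)
    {
        if (double.IsNaN(nextDueSeconds))
        {
            nextDueSeconds = nowSeconds + intervalSeconds; // the first frame always goes out
            return true;
        }

        // Small tolerance so floating-point drift does not drop an on-time frame.
        if (nowSeconds + 1e-9 < nextDueSeconds)
            return false;

        // Advance on a fixed schedule (not from 'now') so the average rate
        // stays at the target instead of drifting low.
        nextDueSeconds += intervalSeconds;

        // After a long stall (app paused, GC spike), resync rather than burst frames.
        if (nextDueSeconds < nowSeconds)
            nextDueSeconds = nowSeconds + intervalSeconds;

        return true;
    }
}
```

In the delivery coroutine one might combine `pacer.ShouldDeliver(Time.realtimeSinceStartup)` with `web_cam_texture_.didUpdateThisFrame` before reading and delivering the pixels. With a 50 fps source and a 30 fps target it passes about three of every five frames, while a source slower than the target passes through unchanged.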