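ChatGPT set off the boom in large language models, but its closed ecosystem also brings many limitations. Fortunately, Meta has become increasingly open. Since LLaMA was released, more and more models have been fine-tuned from it and built around it. A standout is Vicuna, released by researchers from UC Berkeley together with several other research institutions. It was fine-tuned on a relatively small amount of data, at a training cost of about $300, and a preliminary evaluation that used GPT-4 as the judge reported that it reaches about 90% of ChatGPT's quality. In August 2023, version 1.5, fine-tuned from Llama 2, was released, with variants supporting contexts of up to 16K tokens. Because it inherits Llama 2's license, which permits commercial use, Vicuna can also be used commercially free of charge (subject to that license's terms). This makes it a solid free alternative for companies and individual researchers alike. This article walks through deploying and using Vicuna locally, step by step.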

Installing FastChat, the framework behind Vicuna

- Install with pip

```bash
pip3 install "fschat[model_worker,webui]"
```

- Install from source

```bash
git clone https://github.com/lm-sys/FastChat.git
cd FastChat
pip3 install --upgrade pip  # enable PEP 660 support
pip3 install -e ".[model_worker,webui]"
```

If you plan to customize or extend the framework later, installing from source is recommended.
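To confirm the installation worked, a quick check like the one below can help. It is a minimal sketch using only the standard library, and it assumes the pip distribution is named `fschat` (as in the install commands above) while the import package is `fastchat`.

```python
import importlib.util
from importlib import metadata


def is_importable(module_name: str) -> bool:
    """Return True if a top-level module can be found on the current Python path."""
    return importlib.util.find_spec(module_name) is not None


def dist_version(dist_name: str):
    """Return the installed version of a pip distribution, or None if it is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # "fschat" is the pip distribution name; "fastchat" is the import name.
    print("fastchat importable:", is_importable("fastchat"))
    print("fschat version:", dist_version("fschat"))
```

Editable installs (`pip install -e`) also register the distribution, so the version lookup works for both install methods.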

Downloading the Vicuna model

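The latest release is v1.5, fine-tuned from Llama 2. You can use the commands below; if the model has not been downloaded yet, it is downloaded automatically. Alternatively, download the model from Hugging Face yourself and pass its local path to `--model-path`. Note that the 33B model is only available as v1.3, which is based on the original LLaMA rather than Llama 2.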

| Size | Chat Command | Hugging Face Repo |
|---|---|---|
| 7B | `python3 -m fastchat.serve.cli --model-path lmsys/vicuna-7b-v1.5` | lmsys/vicuna-7b-v1.5 |
| 7B-16k | `python3 -m fastchat.serve.cli --model-path lmsys/vicuna-7b-v1.5-16k` | lmsys/vicuna-7b-v1.5-16k |
| 13B | `python3 -m fastchat.serve.cli --model-path lmsys/vicuna-13b-v1.5` | lmsys/vicuna-13b-v1.5 |
| 13B-16k | `python3 -m fastchat.serve.cli --model-path lmsys/vicuna-13b-v1.5-16k` | lmsys/vicuna-13b-v1.5-16k |
| 33B | `python3 -m fastchat.serve.cli --model-path lmsys/vicuna-33b-v1.3` | lmsys/vicuna-33b-v1.3 |
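Which size fits depends mainly on GPU memory. As a rough rule of thumb (my own approximation, not a FastChat tool): in fp16/bf16 each parameter occupies 2 bytes, so the weights alone need about 2 GB per billion parameters. Activations and the KV cache come on top, and long contexts such as the 16k variants need noticeably more. For reference, FastChat's README cites around 14GB of GPU memory for Vicuna-7B and 28GB for Vicuna-13B, and the CLI's `--load-8bit` option roughly halves memory use with slightly degraded quality.

```python
# Bytes per parameter for the weight formats considered here (bf16 is also 2 bytes).
BYTES_PER_PARAM = {"fp16": 2, "int8": 1}


def weight_memory_gb(params_billion: float, dtype: str = "fp16") -> float:
    """Rough GPU memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[dtype]


for size in (7, 13, 33):
    print(f"{size}B: ~{weight_memory_gb(size):.0f} GB fp16, "
          f"~{weight_memory_gb(size, 'int8'):.0f} GB int8")
```

The printed figures are lower bounds for the weights only; leave headroom for generation.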

Command-line chat

In the session below, the model had already been downloaded to `models/vicuna-7b-v1.5/`, and `CUDA_VISIBLE_DEVICES=1` restricts the process to the GPU with index 1:

```console
$ CUDA_VISIBLE_DEVICES=1 python -m fastchat.serve.cli --model-path models/vicuna-7b-v1.5/
Loading checkpoint shards: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 2/2 [00:08<00:00, 4.40s/it]
USER: Who are you?
ASSISTANT: I am Vicuna, a language model trained by researchers from Large Model Systems Organization (LMSYS).
```

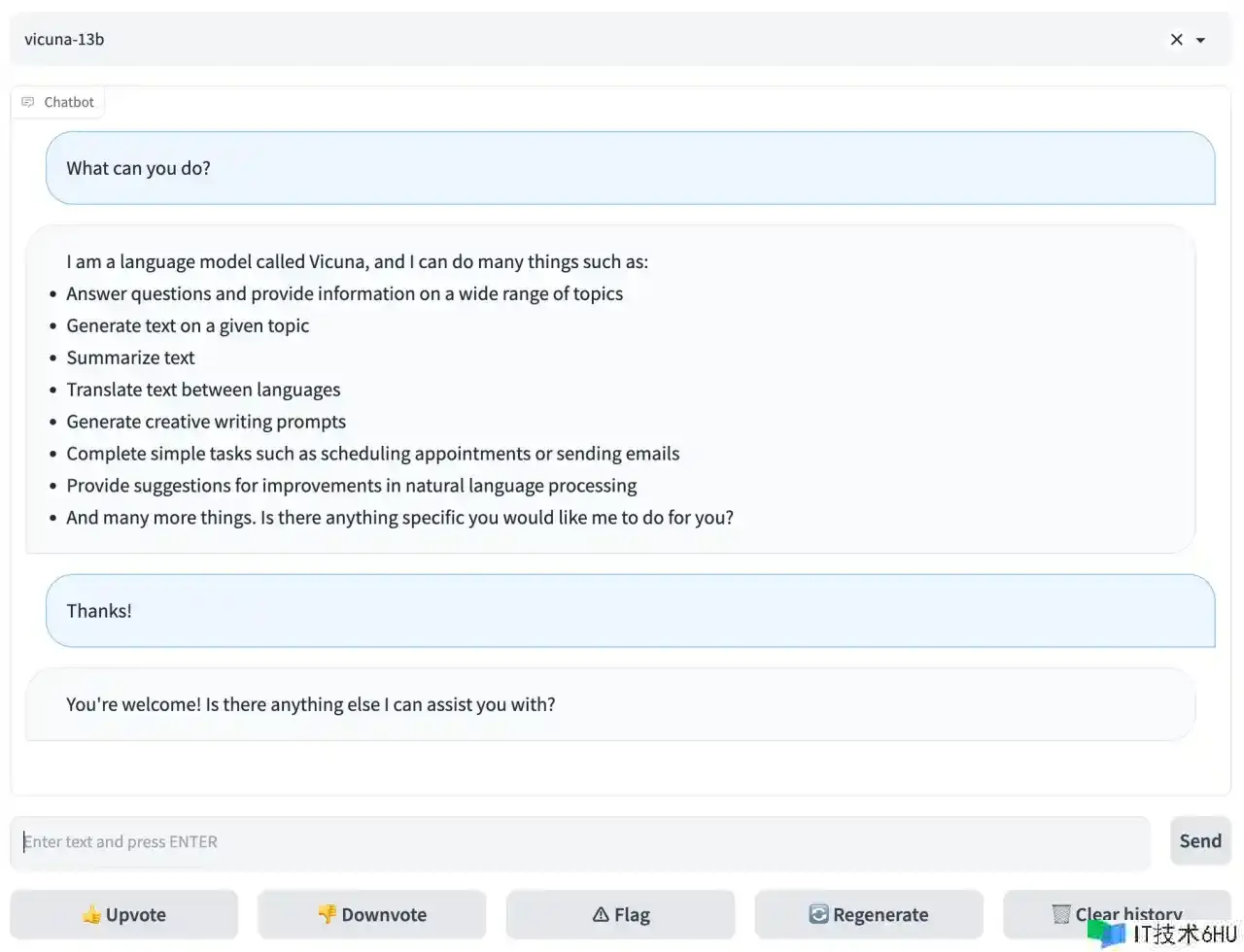
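Under the hood, the CLI wraps each USER/ASSISTANT turn in Vicuna's conversation template before sending it to the model. The sketch below hand-rolls the `vicuna_v1.1` template from FastChat's `fastchat/conversation.py` as I understand it: a fixed system prompt, `USER:`/`ASSISTANT:` roles, a space after the system prompt and after each user message, and `</s>` after each assistant reply. The function name is hypothetical; in real code, prefer `fastchat.conversation.get_conv_template("vicuna_v1.1")` so the format stays in sync with the library.

```python
SYSTEM = (
    "A chat between a curious user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the user's questions."
)


def build_vicuna_prompt(turns, system=SYSTEM):
    """Build a vicuna_v1.1-style prompt.

    turns: list of (user_msg, assistant_msg) pairs; only the last pair may have
    assistant_msg=None, which leaves a trailing "ASSISTANT:" for the model to complete.
    """
    prompt = system + " "
    for user_msg, assistant_msg in turns:
        prompt += f"USER: {user_msg} "
        if assistant_msg is None:
            prompt += "ASSISTANT:"
        else:
            prompt += f"ASSISTANT: {assistant_msg}</s>"
    return prompt


print(build_vicuna_prompt([("Who are you?", None)]))
```

Using a different template than the one the model was fine-tuned with usually degrades answers, which is why FastChat selects the template automatically from the model path.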

Web chat

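To use the web chat interface, you need to run three components, each in its own terminal and started in this order (the model worker registers itself with the controller once the model has loaded):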

- Controller:

```bash
python -m fastchat.serve.controller
```

- Model worker:

```bash
python -m fastchat.serve.model_worker --model-path models/vicuna-13b
```

- Web server:

```bash
python -m fastchat.serve.gradio_web_server
```
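Each component is a long-running process. If you would rather start all three from one place, here is a minimal sketch using `subprocess`. The fixed sleep delays are arbitrary assumptions (loading a 13B model can take much longer on slow disks), the helper names are hypothetical, and the model path is the one used in the commands above.

```python
import subprocess
import sys
import time


def fastchat_commands(model_path: str):
    """argv lists for the controller, model worker and Gradio web server, in start order."""
    py = sys.executable
    return [
        [py, "-m", "fastchat.serve.controller"],
        [py, "-m", "fastchat.serve.model_worker", "--model-path", model_path],
        [py, "-m", "fastchat.serve.gradio_web_server"],
    ]


def launch(model_path: str, delays=(5, 120)):
    """Start the three processes in order.

    delays[i] is how long to wait (seconds) after starting component i, giving it
    time to come up before the next one starts; the values are guesses.
    """
    procs = []
    for i, cmd in enumerate(fastchat_commands(model_path)):
        procs.append(subprocess.Popen(cmd))
        if i < len(delays):
            time.sleep(delays[i])
    return procs


if __name__ == "__main__":
    processes = launch("models/vicuna-13b")
    try:
        for p in processes:
            p.wait()
    except KeyboardInterrupt:
        # Ctrl+C stops all three components.
        for p in processes:
            p.terminate()
```

A fixed delay is the simplest choice; a sturdier launcher would poll the controller until the worker has registered instead of sleeping.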

Fine-tuning

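The UC Berkeley researchers used 4 × A100 (40GB); I also fine-tuned successfully on 4 × A100-SXM4-80GB. Run the command below from the root of the FastChat source checkout, since the script and data paths are relative to it. `meta-llama/Llama-2-7b-hf` is a gated repository on Hugging Face, so you need to request access and log in before it can be downloaded.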

```bash
torchrun --nproc_per_node=4 --master_port=20001 fastchat/train/train_mem.py \
    --model_name_or_path meta-llama/Llama-2-7b-hf \
    --data_path data/dummy_conversation.json \
    --bf16 True \
    --output_dir output_vicuna \
    --num_train_epochs 3 \
    --per_device_train_batch_size 2 \
    --per_device_eval_batch_size 2 \
    --gradient_accumulation_steps 16 \
    --evaluation_strategy "no" \
    --save_strategy "steps" \
    --save_steps 1200 \
    --save_total_limit 10 \
    --learning_rate 2e-5 \
    --weight_decay 0. \
    --warmup_ratio 0.03 \
    --lr_scheduler_type "cosine" \
    --logging_steps 1 \
    --fsdp "full_shard auto_wrap" \
    --fsdp_transformer_layer_cls_to_wrap 'LlamaDecoderLayer' \
    --tf32 True \
    --model_max_length 2048 \
    --gradient_checkpointing True \
    --lazy_preprocess True
```
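`--data_path` points to a JSON file of conversations. Judging from FastChat's `data/dummy_conversation.json` and its training script, each record has an `id` and a `conversations` list whose turns alternate `"from": "human"` and `"from": "gpt"`, starting with the human. The sketch below writes a custom dataset in that shape with a light sanity check; the file name `my_conversations.json`, the sample text and the helper names are hypothetical. It also computes the global batch size implied by the flags above: 2 sequences per device × 16 accumulation steps × 4 GPUs = 128 sequences per optimizer step.

```python
import json


def make_record(record_id, turns):
    """turns: list of (human_text, gpt_text) pairs -> one ShareGPT-style record."""
    conversations = []
    for human_text, gpt_text in turns:
        conversations.append({"from": "human", "value": human_text})
        conversations.append({"from": "gpt", "value": gpt_text})
    return {"id": record_id, "conversations": conversations}


def check_record(record):
    """Raise ValueError unless turns alternate human/gpt, starting with human."""
    for i, turn in enumerate(record["conversations"]):
        expected = "human" if i % 2 == 0 else "gpt"
        if turn["from"] != expected:
            raise ValueError(f"{record['id']}: turn {i} should be from {expected!r}")


def global_batch_size(per_device, grad_accum, n_gpus):
    """Sequences consumed per optimizer step."""
    return per_device * grad_accum * n_gpus


if __name__ == "__main__":
    data = [
        make_record("example_0", [("What is FastChat?",
                                   "An open platform for training and serving chatbots.")]),
    ]
    for rec in data:
        check_record(rec)
    with open("my_conversations.json", "w", encoding="utf-8") as f:
        json.dump(data, f, ensure_ascii=False, indent=2)
    print(global_batch_size(2, 16, 4))  # 128 with the flags used above
```

If you change the number of GPUs, adjusting `--gradient_accumulation_steps` keeps the global batch size (and therefore the learning-rate schedule) comparable.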

RESTful API Server

To run the RESTful API server (which exposes an OpenAI-compatible API), you need to run three components:

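- Controller:

```bash
python -m fastchat.serve.controller
```

- Model worker:

```bash
python -m fastchat.serve.model_worker --model-path lmsys/vicuna-7b-v1.5
```

- API server:

```bash
python -m fastchat.serve.openai_api_server --host 0.0.0.0 --port 8000
```

By default the worker registers the model under the last component of `--model-path` (here `vicuna-7b-v1.5`), and that is the name the examples below pass as `model`.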
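The API server can only answer for models whose workers have registered with the controller, and the `model` field of each request must match a registered name. A quick way to check is the OpenAI-compatible `GET /v1/models` endpoint. Below is a minimal polling sketch using only the standard library; the base URL, timeout and poll interval are assumptions, and the helper names are hypothetical.

```python
import json
import time
import urllib.error
import urllib.request


def model_ids(models_response: dict) -> list:
    """Extract model names from an OpenAI-style /v1/models response."""
    return [m["id"] for m in models_response.get("data", [])]


def wait_for_model(name, base_url="http://localhost:8000/v1", timeout=300, interval=5):
    """Poll /v1/models until `name` is listed; return True, or False on timeout."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(f"{base_url}/models", timeout=10) as resp:
                if name in model_ids(json.load(resp)):
                    return True
        except (urllib.error.URLError, ConnectionError, TimeoutError):
            pass  # server not reachable yet; try again after a short wait
        time.sleep(interval)
    return False


if __name__ == "__main__":
    print(wait_for_model("vicuna-7b-v1.5"))
```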

There are two ways to call the API:

- OpenAI SDK

This approach requires the `openai` library (the code below uses its v1.x interface): `pip install --upgrade openai`

```python
import openai

# FastChat's server does not check the key unless it was started with --api-keys,
# but the SDK still requires a value.
openai.api_key = "EMPTY"
openai.base_url = "http://localhost:8000/v1/"

model = "vicuna-7b-v1.5"
prompt = "Once upon a time"

# create a completion
completion = openai.completions.create(model=model, prompt=prompt, max_tokens=64)
# print the completion
print(prompt + completion.choices[0].text)

# create a chat completion
completion = openai.chat.completions.create(
    model=model,
    messages=[{"role": "user", "content": "Hello! What is your name?"}]
)
# print the completion
print(completion.choices[0].message.content)
```
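For longer answers it can be nicer to stream tokens as they are generated. The sketch below uses an explicit `OpenAI` client object from the same `openai` (v1+) package instead of module-level settings and passes `stream=True`, which FastChat's API server accepts for chat completions. The chunk-joining helper is separated out so it can be checked without a running server; both function names are hypothetical.

```python
def collect_stream(chunks, on_text=None):
    """Concatenate delta.content from streamed chat-completion chunks."""
    parts = []
    for chunk in chunks:
        if not chunk.choices:
            continue
        text = chunk.choices[0].delta.content
        if text:  # role-only and final chunks may carry None or ""
            parts.append(text)
            if on_text:
                on_text(text)
    return "".join(parts)


def stream_chat(question, model="vicuna-7b-v1.5",
                base_url="http://localhost:8000/v1/"):
    from openai import OpenAI  # imported lazily; requires `pip install openai`

    client = OpenAI(api_key="EMPTY", base_url=base_url)
    stream = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": question}],
        stream=True,
    )
    # Print each piece as it arrives and return the full answer at the end.
    return collect_stream(stream, on_text=lambda t: print(t, end="", flush=True))


if __name__ == "__main__":
    stream_chat("Hello! What is your name?")
    print()
```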

- HTTP

This approach works with any HTTP client, such as the `requests` library; here it is demonstrated with curl only:

```bash
curl http://localhost:8000/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "vicuna-7b-v1.5",
    "messages": [{"role": "user", "content": "Hello! What is your name?"}]
  }'
```
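The same request can be sent from Python. This sketch uses only the standard library's `urllib.request` to avoid extra dependencies (with `requests`, `requests.post(url, json=payload).json()` does the same job). The URL and model name are the ones from the curl example; the helper names are hypothetical.

```python
import json
import urllib.request

API_URL = "http://localhost:8000/v1/chat/completions"


def build_payload(content, model="vicuna-7b-v1.5", **params):
    """Request body for /v1/chat/completions; extra params (e.g. temperature) pass through."""
    return {"model": model, "messages": [{"role": "user", "content": content}], **params}


def extract_reply(response: dict) -> str:
    """Pull the assistant text out of an OpenAI-style chat completion response."""
    return response["choices"][0]["message"]["content"]


def chat(content, url=API_URL, **params):
    data = json.dumps(build_payload(content, **params)).encode("utf-8")
    # Supplying data makes urllib send a POST request.
    req = urllib.request.Request(url, data=data,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=120) as resp:
        return extract_reply(json.load(resp))


if __name__ == "__main__":
    print(chat("Hello! What is your name?", temperature=0.7))
```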

Embeddings

API endpoint for obtaining embeddings:

```bash
curl http://localhost:8000/v1/embeddings \
  -H "Content-Type: application/json" \
  -d '{
    "model": "vicuna-7b-v1.5",
    "input": "Hello world!"
  }'
```

For applications of embeddings, FastChat provides a few basic example scripts:

- Text similarity: test_sentence_similarity.py
- Text classification: test_classification.py
- Semantic search (finding related texts): test_semantic_search.py
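To illustrate the similarity and search use cases, the sketch below requests embeddings from the `/v1/embeddings` endpoint (standard library only) and ranks candidate texts by cosine similarity to a query. It is not the code in those example scripts: the function names and example sentences are hypothetical, and sending a list as `input` assumes the server accepts batched input as the OpenAI API does.

```python
import json
import math
import urllib.request

EMBED_URL = "http://localhost:8000/v1/embeddings"


def embed(texts, model="vicuna-7b-v1.5", url=EMBED_URL):
    """Return one embedding vector per input text, in input order."""
    body = json.dumps({"model": model, "input": texts}).encode("utf-8")
    req = urllib.request.Request(url, data=body,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=120) as resp:
        data = json.load(resp)["data"]
    return [item["embedding"] for item in sorted(data, key=lambda d: d["index"])]


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank(query_vec, doc_vecs):
    """Indices of doc_vecs sorted from most to least similar to query_vec."""
    scores = [cosine(query_vec, d) for d in doc_vecs]
    return sorted(range(len(doc_vecs)), key=lambda i: scores[i], reverse=True)


if __name__ == "__main__":
    docs = ["The cat sat on the mat.", "Stock markets fell sharply today.",
            "A kitten is sleeping on the rug."]
    query_vec, *doc_vecs = embed(["Where is the cat?"] + docs)
    for i in rank(query_vec, doc_vecs):
        print(f"{cosine(query_vec, doc_vecs[i]):.3f}  {docs[i]}")
```

Classification can reuse the same pieces, for example by assigning each text the label whose example embeddings it is most similar to.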

Vicuna + LangChain: a local knowledge-base Q&A system

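Official example (from FastChat's LangChain integration guide). Although the code uses OpenAI model names, it runs against the local server: in that guide, the model worker is started with `--model-names "gpt-3.5-turbo,text-davinci-003,text-embedding-ada-002"` so that Vicuna serves requests under those names, and the environment variables `OPENAI_API_BASE=http://localhost:8000/v1` and `OPENAI_API_KEY=EMPTY` point LangChain at the FastChat API server instead of OpenAI: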

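```python
# Written against older LangChain releases; newer ones moved several of these
# classes (e.g. into langchain_community and langchain_openai).
from langchain.chat_models import ChatOpenAI
from langchain.document_loaders import TextLoader
from langchain.embeddings import OpenAIEmbeddings
from langchain.indexes import VectorstoreIndexCreator

# "text-embedding-ada-002" and "gpt-3.5-turbo" are names served by the local Vicuna worker.
embedding = OpenAIEmbeddings(model="text-embedding-ada-002")
# Sample document; must exist locally.
loader = TextLoader("state_of_the_union.txt")
# Builds a vector store (Chroma by default in these versions, so chromadb is required).
index = VectorstoreIndexCreator(embedding=embedding).from_loaders([loader])
llm = ChatOpenAI(model="gpt-3.5-turbo")

questions = [
    "Who is the speaker",
    "What did the president say about Ketanji Brown Jackson",
    "What are the threats to America",
    "Who are mentioned in the speech",
    "Who is the vice president",
    "How many projects were announced",
]

for query in questions:
    print("Query:", query)
    print("Answer:", index.query(query, llm=llm))
```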

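LangChain can load local knowledge bases in many formats (HTML, CSV, JSON, Markdown, PDF, and more), so by swapping out the loader in the example above to fit your data, you can build a simple local knowledge-base Q&A system.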
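Conceptually, such an index splits documents into chunks, embeds them, retrieves the chunks closest to a question, and passes them to the chat model as context. The sketch below spells out those steps against the local FastChat server without LangChain. It is a simplified stand-in for what `VectorstoreIndexCreator` does, not its implementation; the chunk size, overlap, `top_k`, prompt wording and helper names are all assumptions.

```python
import json
import math
import urllib.request

BASE_URL = "http://localhost:8000/v1"
MODEL = "vicuna-7b-v1.5"


def post_json(path, payload):
    req = urllib.request.Request(f"{BASE_URL}{path}",
                                 data=json.dumps(payload).encode("utf-8"),
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)


def chunk_text(text, size=500, overlap=50):
    """Split text into character windows of `size` that overlap by `overlap`."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    if not text:
        return []
    step = size - overlap
    return [text[i:i + size] for i in range(0, max(len(text) - overlap, 1), step)]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def build_prompt(question, contexts):
    """Place the retrieved chunks before the question (the wording is an assumption)."""
    context = "\n---\n".join(contexts)
    return ("Answer the question using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}")


def answer(question, text, top_k=3):
    chunks = chunk_text(text)
    # Embed the question and all chunks in one request, then restore input order.
    data = post_json("/embeddings", {"model": MODEL, "input": [question] + chunks})["data"]
    vecs = [d["embedding"] for d in sorted(data, key=lambda d: d["index"])]
    q_vec, chunk_vecs = vecs[0], vecs[1:]
    best = sorted(range(len(chunks)), key=lambda i: cosine(q_vec, chunk_vecs[i]),
                  reverse=True)[:top_k]
    reply = post_json("/chat/completions", {
        "model": MODEL,
        "messages": [{"role": "user",
                      "content": build_prompt(question, [chunks[i] for i in best])}],
    })
    return reply["choices"][0]["message"]["content"]


if __name__ == "__main__":
    with open("state_of_the_union.txt", encoding="utf-8") as f:
        print(answer("Who is the vice president", f.read()))
```

This version re-embeds the whole document for every question, which is fine for a demo; a real knowledge base would embed the chunks once and store the vectors, which is the job the vector store does in the LangChain example.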
