我司运用Apache DolphinScheduler作为调度结构很久了,感兴趣的小伙伴能够看看这些干货文章:

因为之前监控到会呈现重复的调度的问题,所以此文记载排查重复调度问题的全过程,期望对社区其他的小伙伴能够起到抛砖引玉的效果!

注:本文运用的DolphinScheduler 3.1.1的版别。

数据库运用的Tidb数据库,版别6.5.0,数据库的阻隔等级是默许的RR(Read Committed)。

Quartz版别为2.3.2,存储形式为JDBC。运用的CronTrigger,设置了org.quartz.jobStore.acquireTriggersWithinLock=true

先说定论。Tidb和Mysql在RR阻隔等级下,敞开事务后,创立Read view的机遇不同,导致Tidb会呈现Quartz重复调度的问题。

一、问题发生

前一段时刻,客户给咱们报了重复调度的问题。

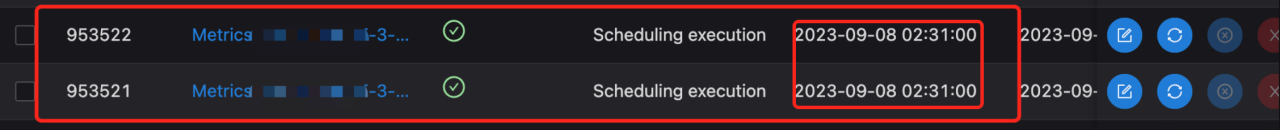

可见下图,同名同调度时刻的使命被履行了两次,id相差1。还不是单个偶发状况,根本每一到两天都会呈现。对于调度结构,呈现重复调度的问题是不可接受的。

二、问题定位

第一步便是定位问题,Apache DolphinScheduler的底层调度结构是Quartz。首要要明晰问题的原因,是Quartz的问题仍是DolphinScheduler的问题。

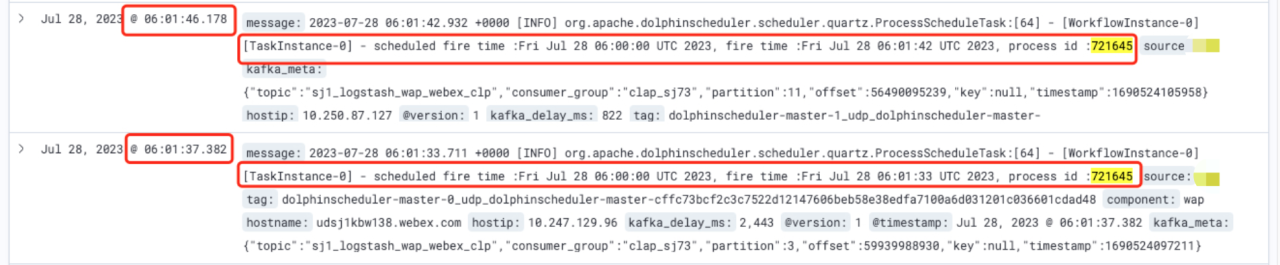

经过排查日志,能够看到是Quartz这边的问题。

那问题很明晰了,Quartz这边呈现了重复调度,其时就觉得很古怪,因为这个结构十分稳定了,上一次发版别仍是在Oct 24, 2019。

很多调度结构的底层运用的都是Quartz,比方XXL-Job等。可是日志这边的确很明晰的看出来的确Quartz重复触发了同一个使命。

网上关于Quartz重复调度的问题的处理方案都是加锁

设置 org.quartz.jobStore.acquireTriggersWithinLock=true

可是DolphinScheduler这边默许在配置文件中就现已设置了这个参数。

比较扎手,不太好排查。网上也没有太多相关的材料。

其时置疑是不是数据库的问题,比方锁失效,多个线程拿到了同一把锁,说实话感觉这个或许性不大,可是的确没想到其他的或许。

所以就先看Quartz的源码和调度逻辑,这种问题一般需要了解更多的底层知识才干发现问题。在看源码的时分也看到了这么一篇博客,写的十分明晰,有十分好的参阅价值,十分感谢作者。

咱们首要讲解一下Quartz里面一些关于调度的概念,后边会用到。

- Quartz中心调度主要是QRTZ_TRIGGERS这张表。Quartz把一次调度称之为一次Fire。QRTZ_TRIGGERS这张表里有几个比较中心的字段,TRIGGER_NAME(调度使命名)、PREV_FIRE_TIME(上次调度时刻)、NEXT_FIRE_TIME(下次调度时刻)、TRIGGER_STATE(其时状况)。

- QRTZ_FIRED_TRIGGERS,这张表存储的正在触发的调度信息。

Quartz调度过程简化

- 加锁

- 获取即将fire的triggers列表,这里有一个概念,比方其时时刻是9.59,他或许会获取下次调度时刻为9.57-10.02之间的trrigers,防止漏调度的问题

- 更新QRTZ_TRIGGERS的状况从WAITING->ACQUAIRED

- 将trigger信息insert进QRTZ_FIRED_TRIGGERS表,状况为ACQUAIRED

- 开释锁

等到真正开始调度

- 加锁

- 检查QRTZ_TRIGGERS状况是否为ACQUAIRED

- 如果是,QRTZ_FIRED_TRIGGERS状况更改为EXECUTING

- 更新QRTZ_TRIGGERS的next_fire_time

- 将QRTZ_TRIGGERS状况更新为WAITING

- 开释锁

1、敞开MVCC日志

在看源码的一起,也联系DBA是否能够坚持DB这边所有的sql以供排查。

DBA这边反馈坚持所有的sql会占有很多的磁盘空间,可是能够先敞开MVCC日志,也便是能够切换到不同的时刻点,去检查其时数据库里面的状况。也很有用。

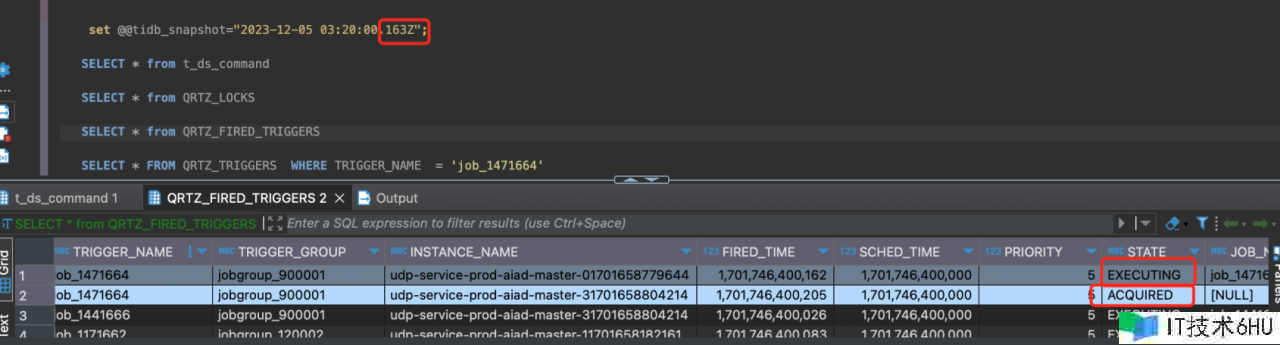

进过排查能够看见,针对于同一条调度,QRTZ_FIRED_TRIGGERS呈现了两条记载,愈加印证了重复调度是因为Quartz引起的。

可是拿不到具体履行了哪些sql,单纯看MVCC没有太多的价值。

2、敞开全量日志

再次跟DBA交流后,给咱们搭建了一个新集群,仅供测验运用,能够敞开全量日志。

有了全量日志就十分方便排查了。

[2024/02/28 18:45:20.141 00:00] [INFO] [session.go:3749] [GENERAL_LOG] [conn=2452605821289251623] [user=udp@10.249.34.78] [schemaVersion=421] [txnStartTS=448042348020498438] [forUpdateTS=448042348020498438] [isReadConsistency=false] [currentDB=udp] [isPessimistic=true] [sessionTxnMode=PESSIMISTIC] [sql="INSERT INTO t_ds_process_instance ( process_definition_code, process_definition_version, state, state_history, recovery, start_time, run_times, name, host, command_type, command_param, task_depend_type, max_try_times, failure_strategy, warning_type, warning_group_id, schedule_time, command_start_time, executor_id, is_sub_process, history_cmd, process_instance_priority, worker_group, environment_code, timeout, tenant_id, next_process_instance_id, dry_run, restart_time ) VALUES ( 12316168402080, 1, 1, '[{"time":"2024-02-28 18:45:20","state":"RUNNING_EXECUTION","desc":"init running"},{"time":"2024-02-28 18:45:20","state":"RUNNING_EXECUTION","desc":"start a new process from scheduler"}]', 0, '2024-02-28 18:45:20.007', 1, 'shell-1-20240228184520007', 'udp-service-dev-aiad-master-1.udp-service-dev-aiad-master-headless.wap-udp-dev.svc.aiadgen-int-1:5678', 6, '{"schedule_timezone":"Asia/Shanghai"}', 2, 0, 1, 0, 0, '2024-02-28 18:43:08.0', '2024-02-28 18:45:17.0', 810004, 0, 'SCHEDULER', 2, 'default', -1, 0, -1, 0, 0, '2024-02-28 18:45:20.007' )"]

[2024/02/28 18:45:26.185 00:00] [INFO] [session.go:3749] [GENERAL_LOG] [conn=2452605821289251629] [user=udp@10.249.34.78] [schemaVersion=421] [txnStartTS=448042349606469635] [forUpdateTS=448042349606469635] [isReadConsistency=false] [currentDB=udp] [isPessimistic=true] [sessionTxnMode=PESSIMISTIC] [sql="INSERT INTO t_ds_process_instance ( process_definition_code, process_definition_version, state, state_history, recovery, start_time, run_times, name, host, command_type, command_param, task_depend_type, max_try_times, failure_strategy, warning_type, warning_group_id, schedule_time, command_start_time, executor_id, is_sub_process, history_cmd, process_instance_priority, worker_group, environment_code, timeout, tenant_id, next_process_instance_id, dry_run, restart_time ) VALUES ( 12316168402080, 1, 1, '[{"time":"2024-02-28 18:45:26","state":"RUNNING_EXECUTION","desc":"init running"},{"time":"2024-02-28 18:45:26","state":"RUNNING_EXECUTION","desc":"start a new process from scheduler"}]', 0, '2024-02-28 18:45:26.05', 1, 'shell-1-20240228184526050', 'udp-service-dev-aiad-master-0.udp-service-dev-aiad-master-headless.wap-udp-dev.svc.aiadgen-int-1:5678', 6, '{"schedule_timezone":"Asia/Shanghai"}', 2, 0, 1, 0, 0, '2024-02-28 18:43:08.0', '2024-02-28 18:45:24.0', 810004, 0, 'SCHEDULER', 2, 'default', -1, 0, -1, 0, 0, '2024-02-28 18:45:26.05' )"]

经过日志咱们能够看到呈现了重复调度,预计调度时刻是 2024-02-28 18:43:08.0

咱们需要找出跟调度相关的日志,QRTZ_FIRED_TRIGGERS和QRTZ_TRIGGERS。

第一次调度相关日志:

[2024/02/28 18:45:08.250 00:00] [INFO] [session.go:3749] [GENERAL_LOG] [conn=2452605821289251625] [user=udp@10.249.34.78] [schemaVersion=421] [txnStartTS=448042343682015234] [forUpdateTS=448042344638840833] [isReadConsistency=false] [currentDB=udp] [isPessimistic=true] [sessionTxnMode=PESSIMISTIC] [sql="UPDATE QRTZ_TRIGGERS SET JOB_NAME = 'job_1201640', JOB_GROUP = 'jobgroup_1200004', DESCRIPTION = null, NEXT_FIRE_TIME = 1709145788000, PREV_FIRE_TIME = 1709145784000, TRIGGER_STATE = 'WAITING', TRIGGER_TYPE = 'CRON', START_TIME = 1709114081000, END_TIME = 4861267200000, CALENDAR_NAME = null, MISFIRE_INSTR = 1, PRIORITY = 5 WHERE SCHED_NAME = 'DolphinScheduler' AND TRIGGER_NAME = 'job_1201640' AND TRIGGER_GROUP = 'jobgroup_1200004'"]

[2024/02/28 18:45:13.348 00:00] [INFO] [session.go:3749] [GENERAL_LOG] [conn=2452605821289251625] [user=udp@10.249.34.78] [schemaVersion=421] [txnStartTS=448042345307308035] [forUpdateTS=448042345897132034] [isReadConsistency=false] [currentDB=udp] [isPessimistic=true] [sessionTxnMode=PESSIMISTIC] [sql="INSERT INTO QRTZ_FIRED_TRIGGERS (SCHED_NAME, ENTRY_ID, TRIGGER_NAME, TRIGGER_GROUP, INSTANCE_NAME, FIRED_TIME, SCHED_TIME, STATE, JOB_NAME, JOB_GROUP, IS_NONCONCURRENT, REQUESTS_RECOVERY, PRIORITY) VALUES('DolphinScheduler', 'udp-service-dev-aiad-master-017091137217721709113723285', 'job_1201640', 'jobgroup_1200004', 'udp-service-dev-aiad-master-01709113721772', 1709145913303, 1709145788000, 'ACQUIRED', null, null, 0, 0, 5)"]

[2024/02/28 18:45:20.141 00:00] [INFO] [session.go:3749] [GENERAL_LOG] [conn=2452605821289251623] [user=udp@10.249.34.78] [schemaVersion=421] [txnStartTS=448042348020498438] [forUpdateTS=448042348020498438] [isReadConsistency=false] [currentDB=udp] [isPessimistic=true] [sessionTxnMode=PESSIMISTIC] [sql="INSERT INTO t_ds_process_instance ( process_definition_code, process_definition_version, state, state_history, recovery, start_time, run_times, name, host, command_type, command_param, task_depend_type, max_try_times, failure_strategy, warning_type, warning_group_id, schedule_time, command_start_time, executor_id, is_sub_process, history_cmd, process_instance_priority, worker_group, environment_code, timeout, tenant_id, next_process_instance_id, dry_run, restart_time ) VALUES ( 12316168402080, 1, 1, '[{"time":"2024-02-28 18:45:20","state":"RUNNING_EXECUTION","desc":"init running"},{"time":"2024-02-28 18:45:20","state":"RUNNING_EXECUTION","desc":"start a new process from scheduler"}]', 0, '2024-02-28 18:45:20.007', 1, 'shell-1-20240228184520007', 'udp-service-dev-aiad-master-1.udp-service-dev-aiad-master-headless.wap-udp-dev.svc.aiadgen-int-1:5678', 6, '{"schedule_timezone":"Asia/Shanghai"}', 2, 0, 1, 0, 0, '2024-02-28 18:43:08.0', '2024-02-28 18:45:17.0', 810004, 0, 'SCHEDULER', 2, 'default', -1, 0, -1, 0, 0, '2024-02-28 18:45:20.007' )"]

[2024/02/28 18:45:16.730 00:00] [INFO] [session.go:3749] [GENERAL_LOG] [conn=2452605821289251625] [user=udp@10.249.34.78] [schemaVersion=421] [txnStartTS=448042346552492033] [forUpdateTS=448042346853957637] [isReadConsistency=false] [currentDB=udp] [isPessimistic=true] [sessionTxnMode=PESSIMISTIC] [sql="UPDATE QRTZ_TRIGGERS SET JOB_NAME = 'job_1201640', JOB_GROUP = 'jobgroup_1200004', DESCRIPTION = null, NEXT_FIRE_TIME = 1709145792000, PREV_FIRE_TIME = 1709145788000, TRIGGER_STATE = 'WAITING', TRIGGER_TYPE = 'CRON', START_TIME = 1709114081000, END_TIME = 4861267200000, CALENDAR_NAME = null, MISFIRE_INSTR = 1, PRIORITY = 5 WHERE SCHED_NAME = 'DolphinScheduler' AND TRIGGER_NAME = 'job_1201640' AND TRIGGER_GROUP = 'jobgroup_1200004'"]

第2次调度相关日志:

[2024/02/28 18:45:18.454 00:00] [INFO] [session.go:3749] [GENERAL_LOG] [conn=2452605821289251605] [user=udp@10.249.34.78] [schemaVersion=421] [txnStartTS=448042345936453636] [forUpdateTS=448042347509317637] [isReadConsistency=false] [currentDB=udp] [isPessimistic=true] [sessionTxnMode=PESSIMISTIC] [sql="SELECT TRIGGER_NAME, TRIGGER_GROUP, NEXT_FIRE_TIME, PRIORITY FROM QRTZ_TRIGGERS WHERE SCHED_NAME = 'DolphinScheduler' AND TRIGGER_STATE = 'WAITING' AND NEXT_FIRE_TIME <= 1709145941638 AND (MISFIRE_INSTR = -1 OR (MISFIRE_INSTR != -1 AND NEXT_FIRE_TIME >= 1709145618319)) ORDER BY NEXT_FIRE_TIME ASC, PRIORITY DESC"]

[2024/02/28 18:45:19.281 00:00] [INFO] [session.go:3749] [GENERAL_LOG] [conn=2452605821289251605] [user=udp@10.249.34.78] [schemaVersion=421] [txnStartTS=448042345936453636] [forUpdateTS=448042347509317637] [isReadConsistency=false] [currentDB=udp] [isPessimistic=true] [sessionTxnMode=PESSIMISTIC] [sql="UPDATE QRTZ_TRIGGERS SET TRIGGER_STATE = 'ACQUIRED' WHERE SCHED_NAME = 'DolphinScheduler' AND TRIGGER_NAME = 'job_1201640' AND TRIGGER_GROUP = 'jobgroup_1200004' AND TRIGGER_STATE = 'WAITING'"]

[2024/02/28 18:45:19.497 00:00] [INFO] [session.go:3749] [GENERAL_LOG] [conn=2452605821289251605] [user=udp@10.249.34.78] [schemaVersion=421] [txnStartTS=448042345936453636] [forUpdateTS=448042347509317637] [isReadConsistency=false] [currentDB=udp] [isPessimistic=true] [sessionTxnMode=PESSIMISTIC] [sql="INSERT INTO QRTZ_FIRED_TRIGGERS (SCHED_NAME, ENTRY_ID, TRIGGER_NAME, TRIGGER_GROUP, INSTANCE_NAME, FIRED_TIME, SCHED_TIME, STATE, JOB_NAME, JOB_GROUP, IS_NONCONCURRENT, REQUESTS_RECOVERY, PRIORITY) VALUES('DolphinScheduler', 'udp-service-dev-aiad-master-117091136300541709113631821', 'job_1201640', 'jobgroup_1200004', 'udp-service-dev-aiad-master-11709113630054', 1709145919453, 1709145788000, 'ACQUIRED', null, null, 0, 0, 5)"]

[2024/02/28 18:45:26.185 00:00] [INFO] [session.go:3749] [GENERAL_LOG] [conn=2452605821289251629] [user=udp@10.249.34.78] [schemaVersion=421] [txnStartTS=448042349606469635] [forUpdateTS=448042349606469635] [isReadConsistency=false] [currentDB=udp] [isPessimistic=true] [sessionTxnMode=PESSIMISTIC] [sql="INSERT INTO t_ds_process_instance ( process_definition_code, process_definition_version, state, state_history, recovery, start_time, run_times, name, host, command_type, command_param, task_depend_type, max_try_times, failure_strategy, warning_type, warning_group_id, schedule_time, command_start_time, executor_id, is_sub_process, history_cmd, process_instance_priority, worker_group, environment_code, timeout, tenant_id, next_process_instance_id, dry_run, restart_time ) VALUES ( 12316168402080, 1, 1, '[{"time":"2024-02-28 18:45:26","state":"RUNNING_EXECUTION","desc":"init running"},{"time":"2024-02-28 18:45:26","state":"RUNNING_EXECUTION","desc":"start a new process from scheduler"}]', 0, '2024-02-28 18:45:26.05', 1, 'shell-1-20240228184526050', 'udp-service-dev-aiad-master-0.udp-service-dev-aiad-master-headless.wap-udp-dev.svc.aiadgen-int-1:5678', 6, '{"schedule_timezone":"Asia/Shanghai"}', 2, 0, 1, 0, 0, '2024-02-28 18:43:08.0', '2024-02-28 18:45:24.0', 810004, 0, 'SCHEDULER', 2, 'default', -1, 0, -1, 0, 0, '2024-02-28 18:45:26.05' )"]

能够看出呈现线程联系,也便是第一次调度彻底结束之后,呈现了第2次调度。

比较疑问的点在于第一次调度后现已更新了QRTZ_TRIGGERS的next fire time,可是第2次调度在select 之后触发的调度跟第一次调度的调度时刻相同。

咱们拿不到sql的履行成果,可是经过日志剖析,第2次调度在履行select 获取需要调度的使命时,返回的成果跟第一次调度时返回的成果相同。

十分古怪。其时置疑是不是数据库的主从同步有问题,导致第2次调度获取的数据是更新前的数据。

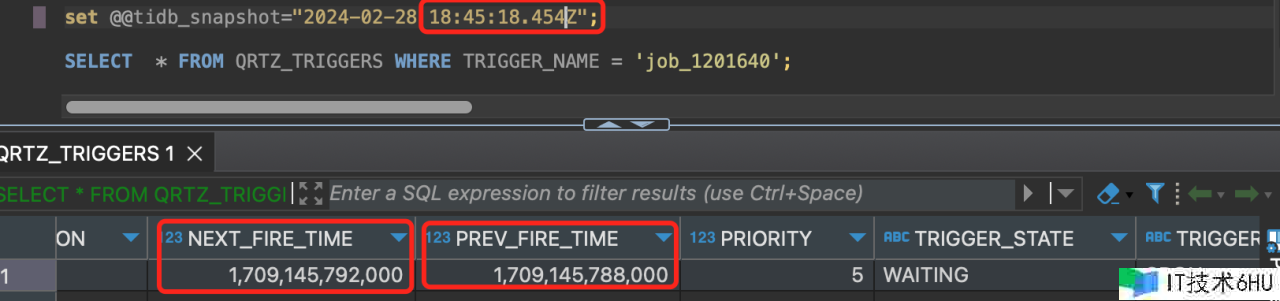

可是经过mvcc检查:

能够显着的看出来,第2次调度的时分,数据库的值更新过的,不存在主从同步的问题。

并且从日志来看,是串行履行的,也便是获取锁没问题,提到获取锁,那么看看加锁开释锁是怎样个流程。

3、检查锁记载

conn能够简单理解为进程号。成果出乎意料,第2次调度的进程在45分11秒就测验获取锁了,45分18秒才获取到锁。中间等待了7秒。

[2024/02/28 18:45:11.772 00:00] [INFO] [session.go:3749] [GENERAL_LOG] [conn=2452605821289251605] [user=udp@10.249.34.78] [schemaVersion=421] [txnStartTS=0] [forUpdateTS=0] [isReadConsistency=false] [currentDB=udp] [isPessimistic=true] [sessionTxnMode=PESSIMISTIC] [sql="SELECT * FROM QRTZ_LOCKS WHERE SCHED_NAME = 'DolphinScheduler' AND LOCK_NAME = 'TRIGGER_ACCESS' FOR UPDATE"]

[2024/02/28 18:45:18.544 00:00] [INFO] [session.go:3749] [GENERAL_LOG] [conn=2452605821289251605] [user=udp@10.249.34.78] [schemaVersion=421] [txnStartTS=448042345936453636] [forUpdateTS=448042347509317637] [isReadConsistency=false] [currentDB=udp] [isPessimistic=true] [sessionTxnMode=PESSIMISTIC] [sql="SET SQL_SELECT_LIMIT=DEFAULT"]

那第一次调度进程什么拿到锁的,所以梳理了一下两个进程请求开释锁的联系。

第一次调度的进程号简称为625,第2次调度的进程号简称为605。

18:45:09.427 625 请求锁

18:45:11.772 605 请求锁 -> 阻塞

18:45:12.210 625拿到锁

625 履行调度逻辑

625 18:45:16.730 履行完,更新triggers 表

18:45:17.287 625开释锁

18:45:17.928 625请求锁

18:45:18.363 605拿到锁

605 履行调度逻辑

看到这里,就有个猜想,605在拿到锁的时分查询QRTZ_TRIGGERS的成果跟625是相同的。

4、复现问题

那咱们能够复现这个流程。

首要创立表。

CREATE TABLE `QRTZ_LOCKS` (

`SCHED_NAME` varchar(120) NOT NULL,

`LOCK_NAME` varchar(40) NOT NULL,

PRIMARY KEY (`SCHED_NAME`,`LOCK_NAME`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin;

CREATE TABLE `t_ds_version` (

`id` int(11) NOT NULL AUTO_INCREMENT,

`version` varchar(200) NOT NULL,

PRIMARY KEY (`id`),

UNIQUE KEY `version_UNIQUE` (`version`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin COMMENT='version';

INSERT INTO udp.t_ds_version (version) VALUES

('1');

随后敞开两个会话,按照如下次序测验

Tidb

| Process1 | Process2 |

|---|---|

| start TRANSACTION; | |

| start TRANSACTION; | |

SELECT * FROM QRTZ_LOCKS WHERE SCHED_NAME = ‘DolphinScheduler’ AND LOCK_NAME = ‘TRIGGER_ACCESS’ FOR UPDATE; 其时读

|

|

SELECT * FROM QRTZ_LOCKS WHERE SCHED_NAME = ‘DolphinScheduler’ AND LOCK_NAME = ‘TRIGGER_ACCESS’ FOR UPDATE; 其时读

|

|

UPDATE t_ds_version set version = ‘2’ where 1 = 1; |

|

| commit; | |

| select * from t_ds_version; `快照读1 | |

version = 1 |

Process2的select * from t_ds_version;读取到的version=1。

而同样的操作在MySQL上履行时,Process2读取到的却是version=2。

该问题是因为MySQL和Tidb敞开事务时创立Read view的机遇不同导致的。

Mysql 在RR阻隔等级下,同一个事务中的

第一个快照读才会创立Read View,之后的快照读读取的都是同一个Read View。

没有搜到Tidb对于这块的相关文档,可是经过现象来看则是在敞开事务的一起就创立了Read View。 关于Mysql的相关文档能够参阅MySQL InnoDB的MVCC实现机制。

那说明应该便是这个不同导致了重复调度的问题。

5、问题复盘

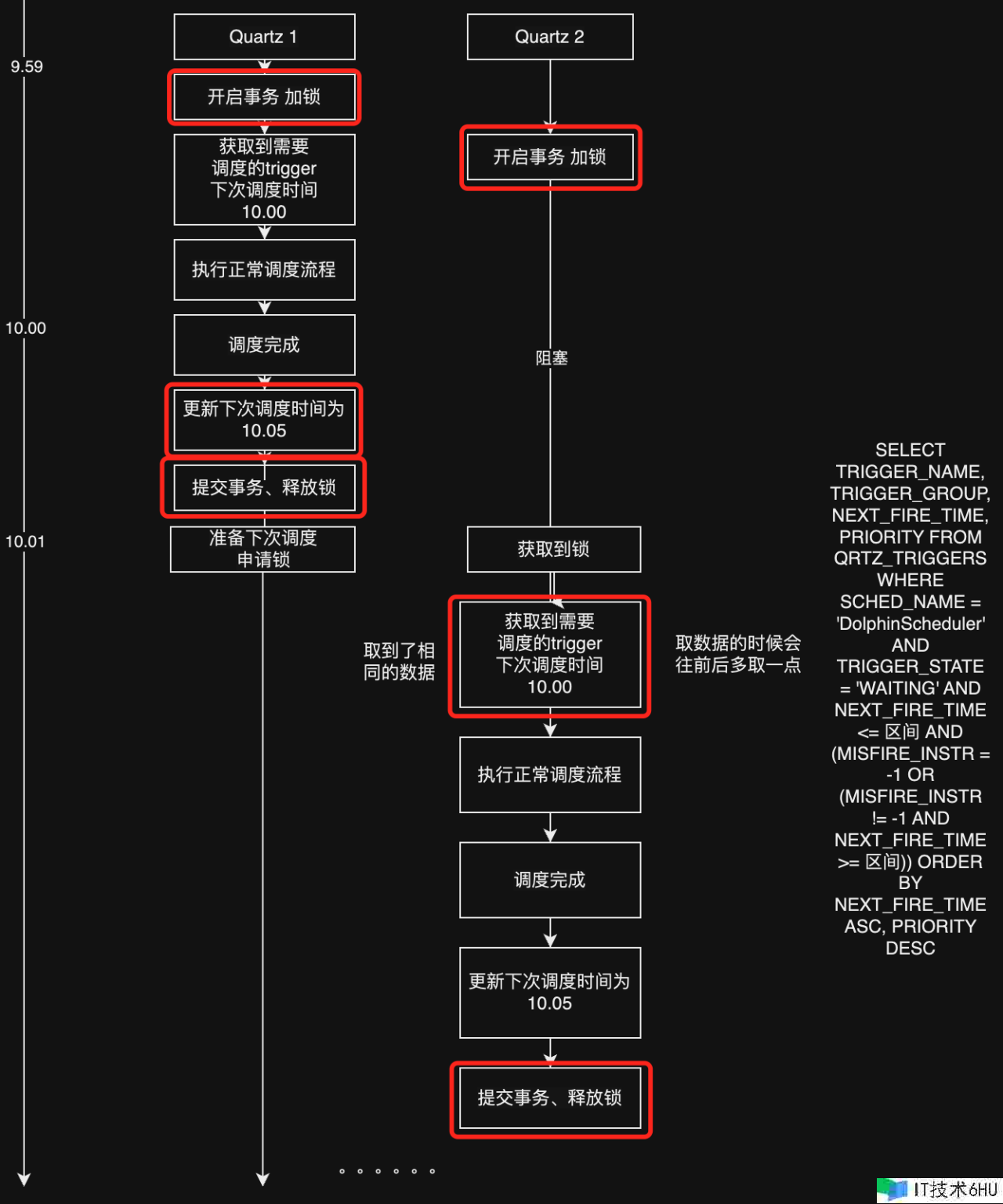

咱们假设一种状况,详见下图。

两个服务器一先一后敞开事务,然后抢占锁,拿到锁后履行调度的逻辑。

如同所示,就呈现了重复调度的状况。只看红框里面的逻辑,就跟最上面咱们模拟在Tidb和Mysql中履行的相同,服务器2在Tidb数据库的状况下,拿到锁之后读取的是9.59时刻下的Read View,所以也正常触发了应该10.00调度的使命。呈现了重复的调度的状况。甚至或许呈现重复调度三次四次的状况,如果在极端巧合的状况下。

三、处理方案

切换Mysql数据库,或许Tidb数据库更改到RC阻隔等级。

关于为什么Tidb和Mysql在RR阻隔等级下有差异,能够Track