Source code

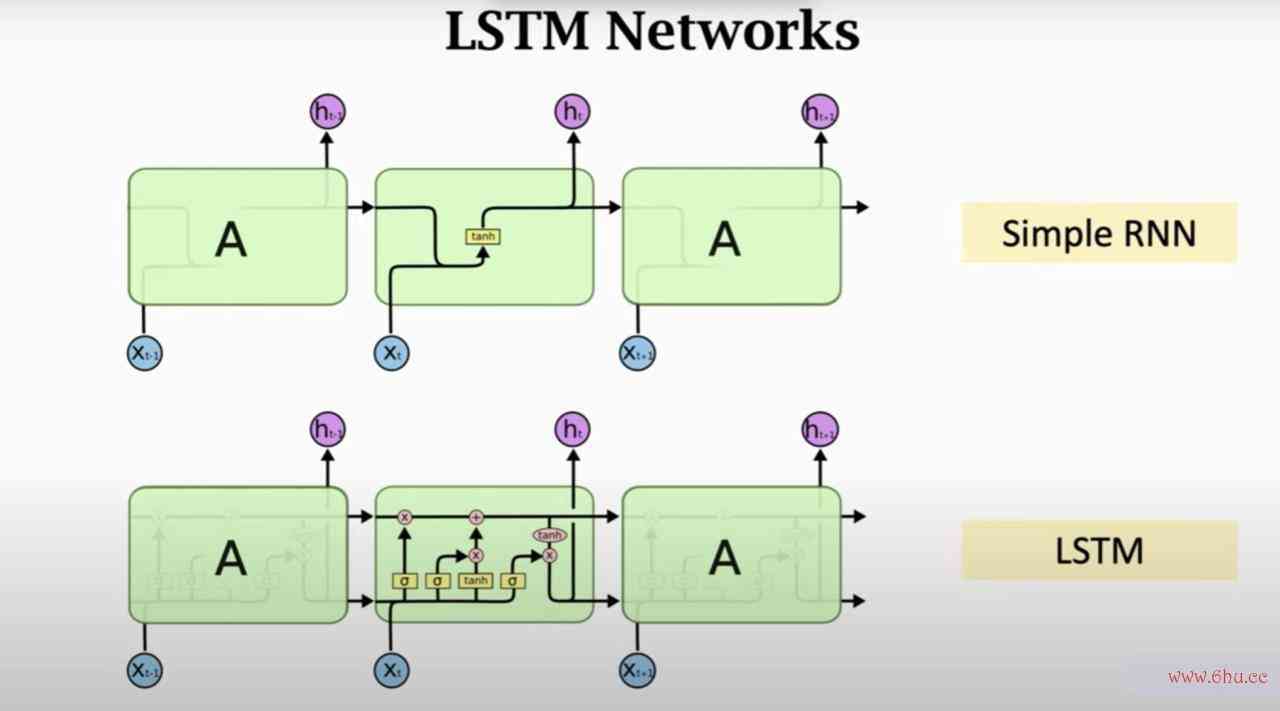

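Introduction to the LSTM model

If you are not interested in the theory, skip this part and go straight to the code below. Detailed explanations of how LSTM works are easy to find online, so only a brief overview is given here.

Long short-term memory (LSTM) is a special kind of RNN that was proposed to solve the vanishing gradient problem of RNNs. A traditional RNN is trained with BPTT (backpropagation through time). When the time span is long, the residual that has to be propagated back decays exponentially, so the network weights update slowly and the RNN cannot show any long-term memory effect. A storage unit is needed to hold the memory, and this is why the LSTM model was proposed.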
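
To make the exponential decay concrete, here is a minimal sketch using a scalar RNN, h_t = tanh(w * h_{t-1} + x_t). The weight `w`, the constant inputs, and the function name `gradient_through_time` are illustrative assumptions and not part of the original project. By the chain rule, the gradient of the last state with respect to the first is a product of per-step factors w * (1 - h_t^2), which shrinks quickly when every factor is below 1.

```python
import math

def gradient_through_time(w, inputs, h0=0.0):
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h = h0
    grad = 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
    return grad

# Hypothetical weight and constant input, chosen only for illustration
w = 0.5
short = gradient_through_time(w, [0.1] * 5)
long = gradient_through_time(w, [0.1] * 50)
print(short, long)
```

With these values the gradient over 50 steps is many orders of magnitude smaller than over 5 steps, which is the effect that makes early time steps almost invisible to the weight update.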

Code

1. Build the Chinese character vocabulary (tokenizer)

    class NameTokenizer():
        def __init__(self, path, required_gender="男"):
            self.names = []
            self._tokens = []
            # Special tokens: '0' pads a name to a fixed length, 'n' marks the end of a name
            self._tokens += ['0', 'n']
            # Each line of the data file is read as "name,gender"
            with open(path, mode='r', encoding="utf-8-sig") as f:
                for line in f:
                    line = line.strip()
                    line = line.split(",")
                    if len(line) < 2:
                        continue  # skip blank or malformed lines
                    gender = line[1]
                    # Keep names of the requested gender and names marked "未知" (unknown)
                    if gender == required_gender or gender == "未知":
                        name = line[0]
                        self.names.append(name)
                        for char in name:
                            self._tokens.append(char)
            # Build the vocabulary: token -> id and id -> token mappings
            self._tokens = sorted(list(set(self._tokens)))
            self.token_id_dict = dict((c, i) for i, c in enumerate(self._tokens))
            self.id_token_dict = dict((i, c) for i, c in enumerate(self._tokens))
            self.vocab = len(self._tokens)
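
The vocabulary step can be tried without a data file. The sketch below applies the same idea to an in-memory list; the helper name `build_vocab` and the sample names are made up for illustration and do not appear in the original code.

```python
def build_vocab(names):
    """Return (token_id_dict, id_token_dict) for the characters in `names`."""
    tokens = {'0', 'n'}  # padding token and end-of-name marker, as in NameTokenizer
    for name in names:
        tokens.update(name)  # add every character of the name
    ordered = sorted(tokens)
    token_id = {c: i for i, c in enumerate(ordered)}
    id_token = {i: c for i, c in enumerate(ordered)}
    return token_id, id_token

# Made-up sample names, used only for illustration
token_id, id_token = build_vocab(["李雷", "王雷"])
print(len(token_id))   # vocabulary size, including the two special tokens
print(token_id['0'], token_id['n'])
```

Because the tokens are sorted before ids are assigned, the mapping is deterministic for a given data file, which matters when the tokenizer is rebuilt at prediction time and has to match the one used for training.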

2. Build the training dataset

    import numpy as np

    # BATCH_SIZE and MAX_LEN are module-level constants that are not shown in this excerpt.
    # Names longer than MAX_LEN would not fit into x_train and should be filtered out first.

    def generate_data(tokenizer: NameTokenizer):
        total = len(tokenizer.names)
        while True:  # loop forever so that training can draw as many batches as it needs
            for start in range(0, total, BATCH_SIZE):
                end = min(start + BATCH_SIZE, total)
                sequences = []
                next_chars = []
                for name in tokenizer.names[start:end]:
                    # The complete name is followed by the end marker 'n'
                    s = name + (MAX_LEN - len(name)) * '0'
                    sequences.append(s)
                    next_chars.append('n')
                    # Every shorter prefix is followed by the character that comes after it
                    for it in range(len(name) - 1):
                        prefix = name[:-1 - it]
                        s = prefix + (MAX_LEN - len(prefix)) * '0'
                        sequences.append(s)
                        next_chars.append(name[-1 - it])
                # One-hot encode the padded sequences and their target characters
                x_train = np.zeros((len(sequences), MAX_LEN, tokenizer.vocab))
                y_train = np.zeros((len(next_chars), tokenizer.vocab))
                for idx, seq in enumerate(sequences):
                    for t, char in enumerate(seq):
                        x_train[idx, t, tokenizer.token_id_dict[char]] = 1
                for idx, char in enumerate(next_chars):
                    y_train[idx, tokenizer.token_id_dict[char]] = 1
                yield x_train, y_train
                del sequences, next_chars, x_train, y_train
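
To see what `generate_data` feeds the network, the sketch below expands a single name into its (padded sequence, next character) pairs, in the same order as the loop above. The helper `expand_name`, the sample name, and the `max_len` value of 5 are assumptions made for illustration.

```python
def expand_name(name, max_len, pad='0', end='n'):
    """Return (padded_sequence, next_char) pairs for one name."""
    # The full name predicts the end marker
    pairs = [(name.ljust(max_len, pad), end)]
    # Each shorter prefix predicts the character that follows it
    for it in range(len(name) - 1):
        prefix = name[:-1 - it]
        pairs.append((prefix.ljust(max_len, pad), name[-1 - it]))
    return pairs

# Made-up name and a hypothetical max_len of 5
for seq, nxt in expand_name("张三丰", 5):
    print(seq, "->", nxt)
```

A name of length n therefore contributes n training samples: one per non-empty prefix, each padded with '0' to the same length.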

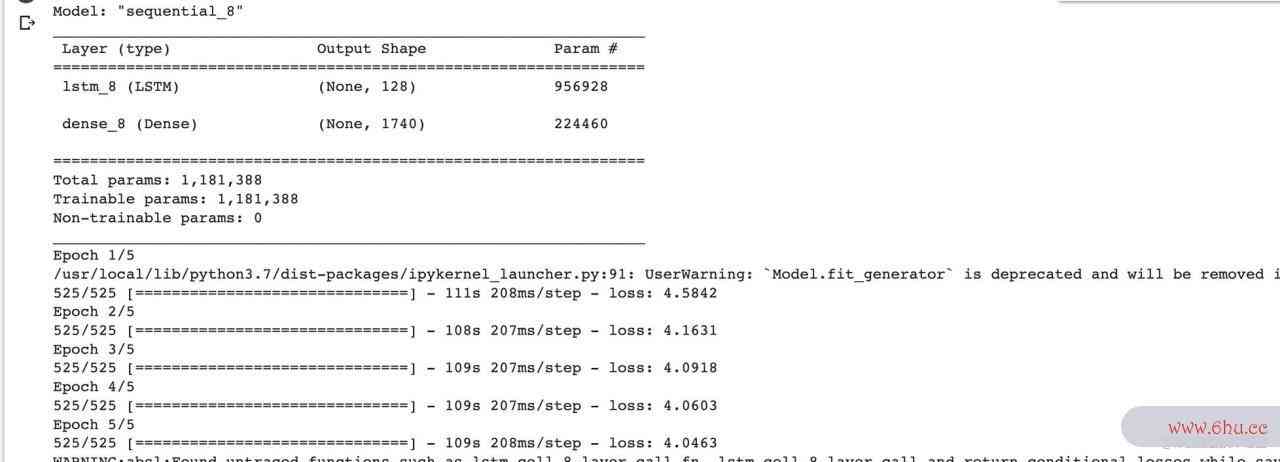

3. Training

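    from tensorflow import keras          # assumed imports; the original import lines are not shown
    from tensorflow.keras import layers

    def train():
        tokenizer = NameTokenizer(path=PATH_DATA, required_gender=GENDER)
        steps = len(tokenizer.names) // BATCH_SIZE
        print("steps:", steps)
        # Build the model
        model = keras.Sequential([
            keras.Input(shape=(MAX_LEN, tokenizer.vocab)),
            # Single LSTM layer; return_sequences=False passes only the last output to the Dense layer
            layers.LSTM(STATE_DIM, dropout=DROP_OUT, return_sequences=False),
            layers.Dense(tokenizer.vocab, activation='softmax')
        ])
        model.summary()
        optimizer = keras.optimizers.Adam(learning_rate=LEARNING_RATE)
        model.compile(loss='categorical_crossentropy', optimizer=optimizer)
        # fit() accepts generators directly; fit_generator() is deprecated in TensorFlow 2.x
        model.fit(generate_data(tokenizer),
                  steps_per_epoch=steps, epochs=EPOCHS)
        # Recent Keras versions may require a file extension here, e.g. "mymodel.keras"
        model.save("mymodel")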
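
As a sanity check on the `model.summary()` output, the trainable parameter counts of this architecture can be estimated by hand. The sketch below assumes the standard LSTM parameterization (four gates, each with an input kernel, a recurrent kernel, and a bias vector); the vocabulary size and state dimension are made-up placeholders, not the values used in the original project.

```python
def lstm_param_count(input_dim, units):
    # Four gates (input, forget, cell candidate, output), each with
    # an input kernel, a recurrent kernel and a bias vector
    return 4 * (units * (input_dim + units) + units)

def dense_param_count(input_dim, units):
    # Weight matrix plus one bias per output unit
    return input_dim * units + units

vocab = 1000      # hypothetical vocabulary size
state_dim = 128   # hypothetical STATE_DIM
total = lstm_param_count(vocab, state_dim) + dense_param_count(state_dim, vocab)
print(total)
```

Since the input is one-hot encoded, the vocabulary size appears in both layers, so the parameter count grows roughly linearly with the number of distinct characters in the name list.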

4. Training results

5. Prediction

    def sample(preds, temperature=1):
        # Helper function to sample an index from a probability array.
        # temperature < 1 sharpens the distribution, temperature > 1 flattens it.
        preds = np.asarray(preds).astype("float64")
        preds = np.log(preds) / temperature
        exp_preds = np.exp(preds)
        preds = exp_preds / np.sum(exp_preds)
        probas = np.random.multinomial(1, preds, 1)
        return np.argmax(probas)
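
The effect of the `temperature` argument is easier to see on a small example. The sketch below repeats the rescaling step of `sample()` with plain Python lists; the helper name `apply_temperature` and the three probabilities are made up for illustration.

```python
import math

def apply_temperature(probs, temperature):
    """Rescale a probability list the same way sample() does before drawing."""
    logits = [math.log(p) / temperature for p in probs]
    exps = [math.exp(v) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = [0.7, 0.2, 0.1]                  # made-up model output
sharp = apply_temperature(probs, 0.5)    # favours the most likely character
flat = apply_temperature(probs, 2.0)     # gives rare characters more weight
print(sharp, flat)
```

A low temperature makes generated names more conservative and repetitive, while a high temperature produces more varied but less plausible ones. A temperature of 1 leaves the model output unchanged.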

    def generateNames(prefix, size):
        tokenizer = NameTokenizer(path=PATH_DATA, required_gender=GENDER)
        model = keras.models.load_model(PATH_MODEL)
        preds = set()
        tmp_generated = prefix
        # Every character of the prefix must exist in the vocabulary
        for char in tmp_generated:
            if char not in tokenizer.token_id_dict:
                print("Character not found in the vocabulary")
                return
        # Right-pad the prefix with '0' up to MAX_LEN
        sequence = ('{0:0<' + str(MAX_LEN) + '}').format(prefix).lower()
        while len(preds) < size:
            x_pre = np.zeros((1, MAX_LEN, tokenizer.vocab))
            for t, char in enumerate(sequence):
                x_pre[0, t, tokenizer.token_id_dict[char]] = 1
            output = model.predict(x_pre, verbose=0)[0]
            index = sample(output)
            char = tokenizer.id_token_dict[index]
            if char == '0' or char == 'n':
                # Padding or end marker: the name is complete, start again from the prefix
                preds.add(tmp_generated)
                tmp_generated = prefix
                sequence = ('{0:0<' + str(MAX_LEN) + '}').format(prefix).lower()
            else:
                tmp_generated += char
                sequence = (
                    '{0:0<' + str(MAX_LEN) + '}').format(tmp_generated).lower()
                print(sequence)
            if len(sequence) > MAX_LEN:
                # The name grew past MAX_LEN: discard it and restart from the prefix
                tmp_generated = prefix
                sequence = ('{0:0<' + str(MAX_LEN) + '}').format(prefix).lower()
        return preds
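
The padding expression `('{0:0<' + str(MAX_LEN) + '}').format(...)` is compact but hard to read. The sketch below shows what it does and compares it with `str.ljust`; the value of `MAX_LEN` and the two helper names are assumptions made for illustration.

```python
MAX_LEN = 5  # hypothetical value; the real constant is defined elsewhere in the project

def pad_with_format(text):
    # '{0:0<5}' means: argument 0, fill character '0', left-aligned, width 5
    return ('{0:0<' + str(MAX_LEN) + '}').format(text)

def pad_with_ljust(text):
    # Same result, written with the built-in string method
    return text.ljust(MAX_LEN, '0')

print(pad_with_format("张三"))
print(pad_with_format("张三丰张三丰"))  # longer than MAX_LEN: returned unchanged, not truncated
```

Neither form truncates, which is why the generation loop needs its own `len(sequence) > MAX_LEN` check to restart when a name grows too long.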

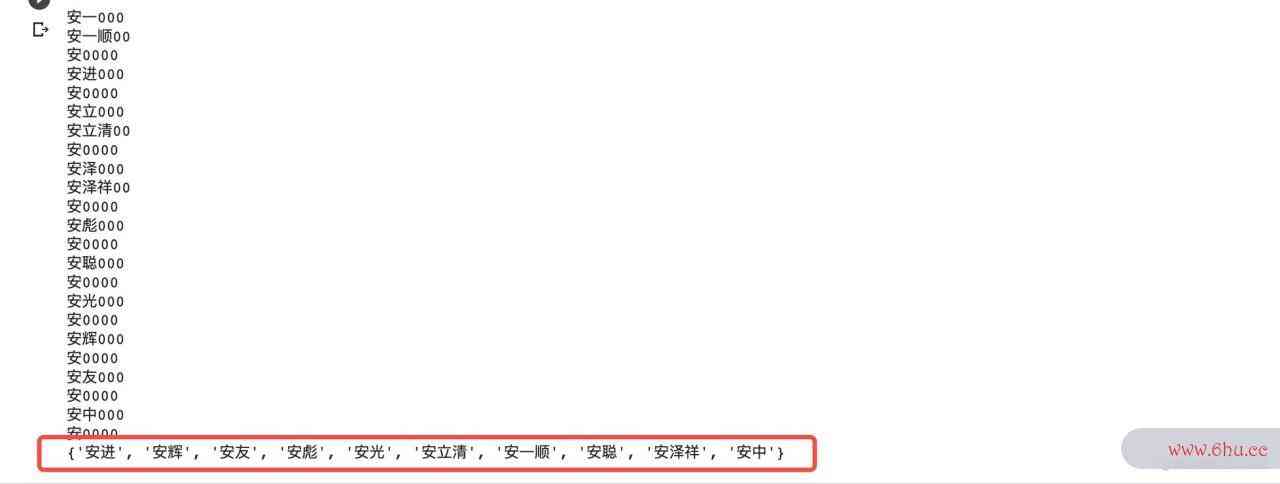

6. Prediction results

The complete code has been uploaded to GitHub. Open source work takes effort, so a star would be much appreciated.
